Creating a quorum disk and fencing for Linux high availability nodes

A quorum disk lets the nodes of a Linux high availability cluster determine whether they are still operational, and fencing shuts them off if they are not.

Once you create a Linux high availability cluster with the Red Hat High Availability Add-On, you can make it more reliable by adding a quorum disk and fencing.

A node uses the quorum disk to determine whether it is still part of the majority, for example after it loses its connection to the other nodes in the cluster. By configuring a quorum disk, you can have the node run a few tests to find out if it is still operational.

I/O fencing is used for nodes that have lost connection to the rest of the high availability cluster. Fencing shuts off failing nodes so that resources can start safely somewhere else without risking corruption due to multiple startups.

Configuring quorum disks

Quorum is about votes. By default, every node has one vote. A node has quorum when it sees more than half of the votes in the cluster, that is, half of the votes (rounded down) plus one. An exception exists for two-node clusters: under those rules, the surviving node could never achieve quorum when the other node shuts down.
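The vote arithmetic can be sketched in a few lines of shell. This is an illustration of the majority rule only, not how the cluster software computes it internally; the function names quorum_threshold and has_quorum are made up for this example.

```shell
#!/bin/sh
# quorum_threshold EXPECTED_VOTES
# Prints the number of votes needed for quorum: half (rounded down) plus one.
quorum_threshold() {
    echo $(( $1 / 2 + 1 ))
}

# has_quorum VISIBLE_VOTES EXPECTED_VOTES
# Succeeds when the visible votes reach the threshold.
has_quorum() {
    [ "$1" -ge "$(quorum_threshold "$2")" ]
}

quorum_threshold 3   # three expected votes: two are needed
quorum_threshold 2   # two expected votes: both are needed

if has_quorum 1 2; then
    echo "a lone node in a two-node cluster has quorum"
else
    echo "a lone node in a two-node cluster has no quorum"
fi
```

The last check shows why two-node clusters need special handling: a single surviving node holds one of two votes and can never reach the threshold on its own.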

Configuring a quorum disk requires shared storage and heuristics. The shared storage device must be accessible to all nodes in the cluster. The heuristics consist of at least one test that a node has to perform successfully before it can use the quorum disk.

If a split-brain situation arises, orphaning a node from the rest of the cluster, all nodes poll the quorum disk. A node that passes the heuristics test can count an extra vote toward its quorum. A node that fails the heuristics test has no access to the vote offered by the quorum disk; it therefore loses quorum and knows that it has to be terminated.
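As a rough sketch of that vote counting, assume a two-node cluster in which each node has one vote and the quorum disk contributes one more. The function votes_for_isolated_node is hypothetical and takes the heuristic test as its arguments; the commands true and false stand in for a passing and a failing test.

```shell
#!/bin/sh
# votes_for_isolated_node HEURISTIC_COMMAND [ARGS...]
# Prints the votes an isolated node can count: its own vote, plus the
# quorum disk vote if the heuristic command succeeds.
votes_for_isolated_node() {
    votes=1                          # the node's own vote
    if "$@" >/dev/null 2>&1; then
        votes=$(( votes + 1 ))       # heuristic passed: add the quorum disk vote
    fi
    echo "$votes"
}

votes_for_isolated_node true    # passing heuristic
votes_for_isolated_node false   # failing heuristic
```

With three expected votes, two are needed for quorum, so only the node that passes its heuristic stays quorate.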

For data centers that use shared storage and Red Hat Linux, a quorum disk requires a partition on the shared disk device. Use the mkqdisk Linux utility to mark this partition as a quorum disk. Then, specify the heuristics to use in the LuCI Web management interface.

Use the fdisk Linux utility on one cluster node to create a partition on the shared storage device; 100 MB should suffice. On the other cluster node, use the partprobe command to update the partition table.

On only one of the nodes, use the command mkqdisk -c /dev/sdb1 -l quorumdisk to create the quorum disk. Before typing this command, make sure to double-check the name of the device.

On the other node, use mkqdisk -L to show all quorum disks. You should see the quorum disk that you've just created, labeled quorumdisk.
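The two mkqdisk steps can be wrapped in a small script that prints each command before running it. This is a cautious sketch: the run helper and the APPLY, DEVICE and LABEL variables are inventions for this example, and nothing is executed unless APPLY=1 is set. The device name /dev/sdb1 and the label quorumdisk are the ones used in the text; check them against your own system.

```shell
#!/bin/sh
DEVICE=${DEVICE:-/dev/sdb1}    # partition on the shared storage device
LABEL=${LABEL:-quorumdisk}     # label used later in the cluster configuration

# Print the command; execute it only when APPLY=1 is set explicitly.
run() {
    echo "+ $*"
    if [ "${APPLY:-0}" = "1" ]; then
        "$@"
    fi
}

# Run on ONE node only: mark the partition as a quorum disk.
create_quorum_disk() {
    if [ "${APPLY:-0}" = "1" ] && [ ! -b "$DEVICE" ]; then
        echo "error: $DEVICE is not a block device" >&2
        return 1
    fi
    run mkqdisk -c "$DEVICE" -l "$LABEL"
}

# Run on the other node: list all quorum disks that are visible.
list_quorum_disks() {
    run mkqdisk -L
}

create_quorum_disk
list_quorum_disks
```

Running the script without APPLY=1 only shows the commands, which gives you a chance to double-check the device name before anything is written.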

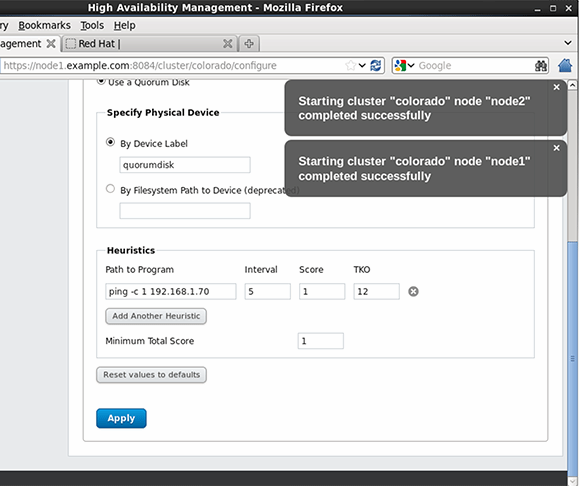

In the LuCI interface, open the Configuration > QDisk tab and select the option "Use a Quorum Disk." To specify which device you want to use, enter the label that you assigned when you created the quorum disk, in this case quorumdisk.

Now, you'll need to specify the heuristics. This is a small test that a node has to pass to get access to the quorum disk vote. One example is a ping command that pings the default gateway. To specify this test, enter ping -c 1 192.168.1.70 in the "Path to Program" field. The interval specifies how often the test is executed; five seconds is a good value. The score specifies how many points the test contributes when it succeeds. If you connect several different heuristics tests to a quorum disk, you can give them different scores, but in this example you can use a score of 1.

Time to knock out specifies the tolerance for the quorum test. Set it to 12 seconds, which with a five-second interval means that a node can fail the heuristics test no more than twice. The last parameter is the minimum total score, which is the combined score that a node must reach for its heuristics to count as passed; with a single test that scores 1, set it to 1.
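The interplay of interval, tolerance, score and minimum total score can be illustrated with a little shell arithmetic. The sketch below only mimics the bookkeeping described above; it is not the quorum disk daemon's own logic, and allowed_misses and total_score are hypothetical helper names. The command true stands in for the ping test so that the example runs without a network.

```shell
#!/bin/sh
# allowed_misses TOLERANCE_SECONDS INTERVAL_SECONDS
# How many test runs fit into the tolerance window.
allowed_misses() {
    echo $(( $1 / $2 ))
}

# total_score SCORE COMMAND [SCORE COMMAND ...]
# Adds up the score of every heuristic whose command succeeds.
total_score() {
    total=0
    while [ "$#" -ge 2 ]; do
        if sh -c "$2" >/dev/null 2>&1; then
            total=$(( total + $1 ))
        fi
        shift 2
    done
    echo "$total"
}

allowed_misses 12 5                 # 12-second tolerance, 5-second interval

MIN_SCORE=1                         # minimum total score from the text
score=$(total_score 1 true)         # real test: "ping -c 1 192.168.1.70"
if [ "$score" -ge "$MIN_SCORE" ]; then
    echo "heuristics passed with score $score"
else
    echo "heuristics failed with score $score"
fi
```

With several heuristics, for example total_score 1 "ping -c 1 192.168.1.70" 2 "some-other-test", the minimum total score decides how many of them may fail before the node gives up the quorum disk vote.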

After creating the quorum device, you can use the cman_tool status command to verify that it works as expected. Look at the number of nodes, which is two, and the number of expected votes, which is three. The difference comes from the quorum device votes, which as you'll see is set to one. This means that the quorum device is working.

Listing 1: Use cman_tool status to verify the working of the quorum device.

    [root@node1 ~]# cman_tool status
    Version: 6.2.0
    Config Version: 2
    Cluster Name: colorado
    Cluster Id: 17154
    Cluster Member: Yes
    Cluster Generation: 320
    Membership state: Cluster-Member
    Nodes: 2
    Expected votes: 3
    Quorum device votes: 1
    Total votes: 3
    Node votes: 1
    Quorum: 2
    Active subsystems: 11
    Flags:
    Ports Bound: 0 11 177 178
    Node name: node1
    Node ID: 1
    Multicast addresses: 239.192.67.69
    Node addresses: 192.168.1.80
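If you want to script this check, the relevant fields can be pulled out of the cman_tool status output with awk. The following is a minimal sketch: status_field and qdisk_ok are hypothetical helpers, and the comparison assumes that every node has exactly one vote, as in this example cluster. The sample input is copied from the listing so that the script runs anywhere.

```shell
#!/bin/sh
# status_field NAME
# Reads "cman_tool status" output on stdin and prints the value of one field.
status_field() {
    awk -F': *' -v key="$1" '$1 == key { print $2; exit }'
}

# qdisk_ok
# Succeeds when the quorum device contributes a vote and the expected votes
# equal nodes + quorum device votes (assumes one vote per node).
qdisk_ok() {
    status=$(cat)
    qvotes=$(printf '%s\n' "$status" | status_field "Quorum device votes")
    expected=$(printf '%s\n' "$status" | status_field "Expected votes")
    nodes=$(printf '%s\n' "$status" | status_field "Nodes")
    qvotes=${qvotes:-0}; expected=${expected:-0}; nodes=${nodes:-0}
    [ "$qvotes" -ge 1 ] && [ "$expected" -eq $(( nodes + qvotes )) ]
}

# Sample input; on a live node use: cman_tool status | qdisk_ok
if qdisk_ok <<'EOF'
Nodes: 2
Expected votes: 3
Quorum device votes: 1
Total votes: 3
EOF
then
    echo "quorum disk vote is counted"
else
    echo "quorum disk vote is missing"
fi
```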

Setting up fencing

Fencing helps maintain the integrity of the cluster. If the cluster protocol packets sent by the Corosync messaging framework can no longer reach another node, the cluster uses hardware fencing to ensure that the other node is truly down before taking over its services.

Hardware fencing involves a hardware device terminating a failing node. Typically, a power switch or an integrated management card such as HP iLO or Dell DRAC serves this purpose.
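The ordering matters: services move only after the fence operation has succeeded. The sketch below shows that rule in isolation. It is a conceptual illustration, not the cluster's real recovery code; fence_peer and start_services are hypothetical placeholders, and FENCE_RESULT simulates whether the fence device reported success.

```shell
#!/bin/sh
# Placeholder for the real fence operation; returns FENCE_RESULT (0 = success).
fence_peer() {
    return "${FENCE_RESULT:-0}"
}

# Placeholder for starting the failed node's services locally.
start_services() {
    echo "services started for $1"
}

# take_over PEER
# Start the peer's services only if the peer was fenced successfully.
take_over() {
    peer=$1
    if fence_peer "$peer"; then
        start_services "$peer"
    else
        echo "fencing $peer failed; not starting its services" >&2
        return 1
    fi
}

take_over node2
```

If fencing fails, the safe choice is to do nothing, because the other node may still be writing to the shared storage.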

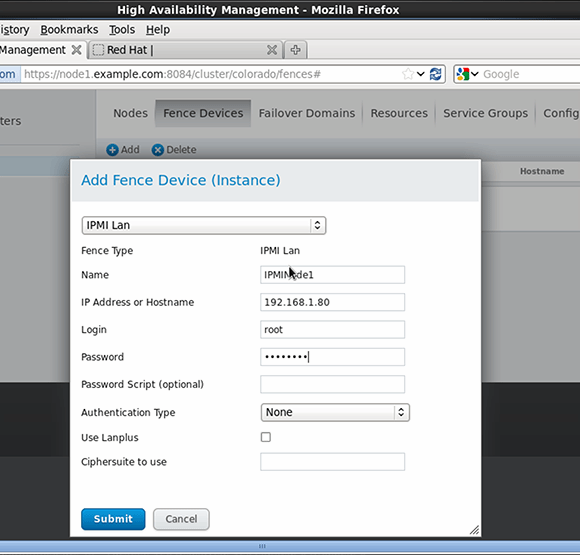

Setting up fencing is a two-step process: configuring the fence devices, then associating those fence devices with the nodes in the cluster. To define the fence device in the Linux high availability cluster, open the "Fence Devices" tab in Red Hat's Conga Web-based cluster management interface. After clicking Add, you'll see a list of all available fence devices. A popular fence device type is IPMI LAN, which can send instructions to many integrated management cards.

After selecting the fence device, you'll need to define its properties. These properties are different per fence device, but often include a user name, a password and an IP address. After entering these parameters, you can submit the device to the configuration (see Figure 2).
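Before relying on a fence device, it can help to query it by hand with the same parameters. For IPMI LAN devices, the fence_ipmilan agent accepts an address (-a), a login (-l), a password (-p) and an action (-o). The sketch below only assembles and prints such a status query; ipmi_status_cmd is a hypothetical helper, and the address 192.0.2.10 and the user admin are placeholder values, not settings from this cluster.

```shell
#!/bin/sh
FENCE_IP=${FENCE_IP:-192.0.2.10}     # placeholder address of the management card
FENCE_USER=${FENCE_USER:-admin}      # placeholder login

# ipmi_status_cmd ADDRESS LOGIN PASSWORD
# Prints a fence_ipmilan command line that asks the device for its power status.
ipmi_status_cmd() {
    printf 'fence_ipmilan -a %s -l %s -p %s -o status\n' "$1" "$2" "$3"
}

ipmi_status_cmd "$FENCE_IP" "$FENCE_USER" '<password>'
```

Running the printed command with the real password should report the power status of the node; if it does not, the same credentials will not work from the cluster either.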

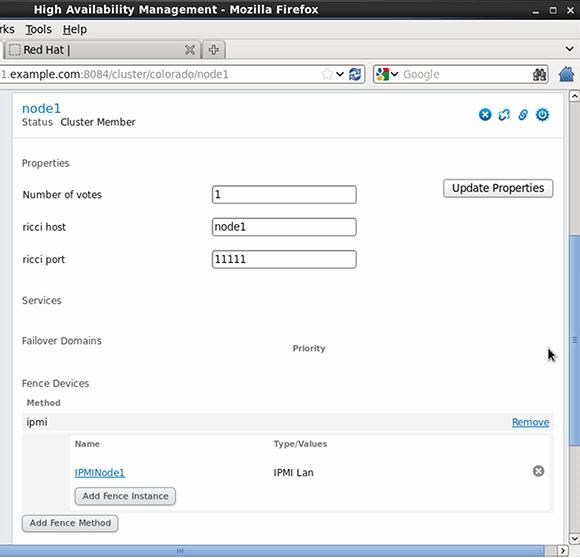

After defining the fence devices, connect them to the nodes via "Nodes" in the LuCI management interface. Once you select a node, scroll through its properties and click the "Add Fence Method" button. Next, enter a name for the fence method you're using and, for each method, click "Add Fence Instance" to add the device that you've created. Submit the configuration and repeat this procedure for all nodes in your cluster.
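Once every node has a fence method, you may want to test each one deliberately. On cman-based clusters the fence_node utility fences a node by name using the configured method; treat its availability on your release as an assumption to verify. Because the command really powers off the target, the sketch below only prints a test plan. The helper fence_test_plan is hypothetical, node1 appears in the listing, and node2 is an assumed name for the second node.

```shell
#!/bin/sh
# fence_test_plan NODE [NODE ...]
# Prints one fence_node command per node; run them by hand, one at a time,
# and wait for each node to rejoin the cluster before testing the next.
fence_test_plan() {
    for node in "$@"; do
        echo "fence_node $node"
    done
}

fence_test_plan node1 node2
```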

Sander van Vugt is an independent trainer and consultant based in the Netherlands. He is an expert in Linux high availability, virtualization and performance. He has authored many books on Linux topics, including Beginning the Linux Command Line, Beginning Ubuntu LTS Server Administration and Pro Ubuntu Server Administration.