Data center cooling optimization in the virtualized-server world

How many hamburgers can you cook on a blade server? If your servers are literally cooking, there are a variety of systems to consider for keeping your equipment cool.

It's 2008, the virtual environment is the new computing paradigm, and software and hardware seem to work together as advertised. Virtual machines appear to offer many benefits, including better resource utilization and management while saving energy. We have come full circle and are now concentrating more computing power into a single rack. By using high-density 1U servers and blade servers, a single rack can have more processing power than an entire midsize mainframe of 10 years ago. However, "virtualization" has not repealed the laws of physics: the hardware is very real, and it requires a lot of energy and cooling resources.

The plain truth about all computers is that they turn every watt (W) of power directly into heat (and, yes, I know that they also do useful computing). With the advent of widespread virtualization based on high-performance, multi-core, multiprocessor servers, power density has risen from 25 W to 50 W per square foot to 250 W to 500 W per square foot, and it continues to rise.

In terms of watts per rack, power in the mid to late 1990s (in the last century) typically ranged from 500 W to 1 kilowatt (kW), occasionally reaching 2 kW. Once we got past the dreaded Y2K frenzy and started moving forward instead of focusing on remediation, servers got smaller and faster and started drawing more power.

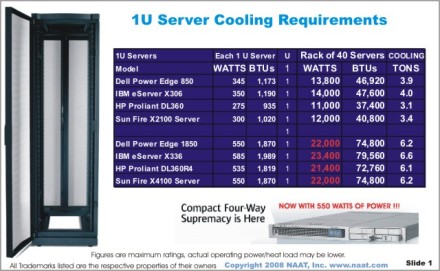

Today a typical 1U server draws 250 W to 500 W. When 40 of them are stacked in a standard 42U rack, they can draw 10 kW to 20 kW and produce 35,000 to 70,000 British thermal units per hour (BTU/hr). That requires 3 to 6 tons of cooling per rack. For comparison, five years ago the same amount of cooling was typically specified for an entire 200-square-foot to 400-square-foot room with 10 to 15 racks. See 1U server cooling requirements table.
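
The rack figures above are straightforward unit conversions. The sketch below, with hypothetical helper names, uses the standard factors of about 3.412 BTU/hr per watt and 12,000 BTU/hr per ton of cooling, and assumes (as the article does) that all electrical input becomes heat.

```python
# Standard conversion factors
BTU_PER_HR_PER_WATT = 3.412   # 1 W of heat is about 3.412 BTU/hr
BTU_PER_HR_PER_TON = 12_000   # 1 ton of cooling removes 12,000 BTU/hr


def heat_load(watts: float) -> tuple[float, float]:
    """Return (BTU/hr, tons of cooling) for an electrical load in watts.

    Assumes every watt drawn ends up as heat.
    """
    btu_per_hr = watts * BTU_PER_HR_PER_WATT
    return btu_per_hr, btu_per_hr / BTU_PER_HR_PER_TON


def rack_heat(servers: int, watts_per_server: float) -> tuple[float, float, float]:
    """Return (kW, BTU/hr, tons) for a rack of identical servers (hypothetical helper)."""
    watts = servers * watts_per_server
    btu_per_hr, tons = heat_load(watts)
    return watts / 1000, btu_per_hr, tons


if __name__ == "__main__":
    for w in (250, 500):
        kw, btu, tons = rack_heat(40, w)
        print(f"40 x {w} W: {kw:.0f} kW, {btu:,.0f} BTU/hr, {tons:.1f} tons")
```

For 40 servers at 250 W and 500 W it prints about 34,120 BTU/hr (2.8 tons) and 68,240 BTU/hr (5.7 tons), which round to the 35,000 to 70,000 and 3 to 6 ton figures in the text.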

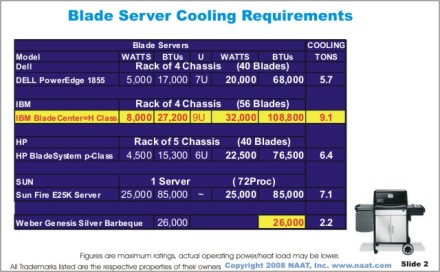

Blade servers provide even more space-saving benefits, but they have higher power and cooling requirements. A blade chassis can support dozens of multi-core processors in only 8U to 10U of rack space, but each one requires 6 kW to 8 kW. With four or five blade chassis per rack, that adds up to 24 kW to 40 kW per rack! See Blade server cooling requirements table.
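
Rack totals for blades come from two multiplications: how many chassis fit in the rack and how much each one draws. This sketch (helper names are hypothetical) bounds the range for a 42U rack, assuming nothing else occupies rack space.

```python
def chassis_per_rack(rack_u: int, chassis_u: int) -> int:
    """Whole blade chassis that fit in a rack (ignores space for switches/PDUs)."""
    return rack_u // chassis_u


def rack_power_range_kw(min_chassis: int, max_chassis: int,
                        min_kw: float, max_kw: float) -> tuple[float, float]:
    """(low, high) rack power: fewest chassis at lowest draw, most at highest draw."""
    return min_chassis * min_kw, max_chassis * max_kw


if __name__ == "__main__":
    most = chassis_per_rack(42, 8)     # 8U chassis: 5 fit in 42U
    fewest = chassis_per_rack(42, 10)  # 10U chassis: 4 fit in 42U
    print(fewest, most, rack_power_range_kw(fewest, most, 6, 8))
```

It prints `4 5 (24, 40)`: four chassis give 24 kW to 32 kW, and a fifth 8 kW chassis raises the ceiling to 40 kW.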

Cooling and virtualization

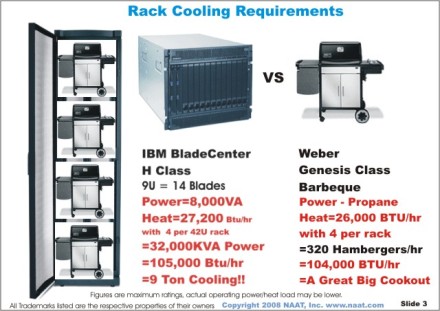

Virtualization has taken hold and is rapidly becoming the latest de facto computing trend. It has proven to work effectively and has many benefits. One of the many claims is improved energy efficiency, because it can reduce the number of "real" servers. Of course, the "upgrade" to a virtualized environment usually includes new high-performance, high-density servers. The server hardware uses less energy overall because there are fewer servers. However, concentrating high-density servers in a much smaller space creates deployment problems. See rack cooling requirements with Weber barbeque heat comparison.

So if virtualization uses less space, and the servers use less energy overall, what is the downside?

Power requirements: When properly executed with fewer servers, virtualization will use less server power overall. However, many existing power distribution systems cannot deliver 20 kW to 30 kW per rack.

Cooling requirements: If virtualization is properly implemented, it can use less space and power by relying on fewer, denser servers. It would seem to follow that these servers need less cooling, so virtualization should be more energy efficient and your data center more "green" (ah, the magic "G" word).

So this is where the virtualization-efficiency conundrum appears. As mentioned earlier, data centers built only five years ago were not designed for 10, 20, or even 30 kW per rack, so their cooling systems cannot efficiently remove heat from such a compact area. If every rack were configured at 20 kW, the average power and cooling load could exceed 500 W per square foot. Even some recently built Tier IV data centers are still limited to an average of 100 W to 150 W per square foot. As a result, many high-density projects have had to spread servers across half-empty racks to keep them from overheating, which lowers the overall average power per square foot.
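
The 500 W per square foot figure depends on how much floor area each rack claims once aisles are included. The sketch below assumes a hypothetical 40 square feet per rack (substitute your own layout's figure), and both function names are hypothetical.

```python
def average_w_per_sq_ft(kw_per_rack: float, sq_ft_per_rack: float) -> float:
    """Average power density over the floor area attributed to each rack.

    sq_ft_per_rack is the rack footprint plus its share of aisles and
    clearances; the value used below is a hypothetical planning figure.
    """
    return kw_per_rack * 1000 / sq_ft_per_rack


def max_kw_per_rack(design_w_per_sq_ft: float, sq_ft_per_rack: float) -> float:
    """Largest average rack load a room designed for design_w_per_sq_ft supports."""
    return design_w_per_sq_ft * sq_ft_per_rack / 1000


if __name__ == "__main__":
    AREA = 40.0  # hypothetical: about 40 sq ft of floor per rack, aisles included
    print(average_w_per_sq_ft(20, AREA))   # every rack at 20 kW
    print(max_kw_per_rack(150, AREA))      # room designed for 150 W/sq ft
```

With those assumptions it prints 500.0 and 6.0: a room designed for 150 W per square foot averages only about 6 kW per rack, so a 20 kW load must be spread over the floor area of more than three racks.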

Comparing cooling options

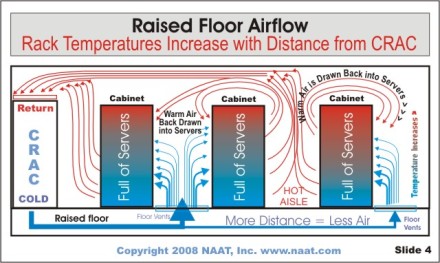

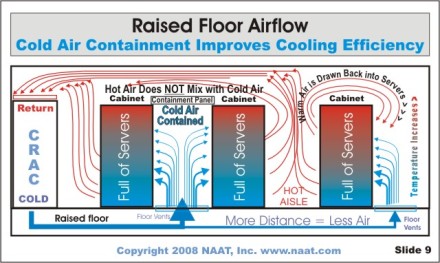

The "classic" data center harkens back to the days of the mainframe. Its raised floor served several purposes: it made it easy to distribute cold air from the computer room air conditioner (CRAC), and it contained the power and communications cabling. While mainframes were very large, they averaged only 25 W to 50 W per square foot. Originally, equipment rows were all oriented facing the same direction to make the room look neat and organized. In many cases the cold air entered the bottom of the equipment cabinets and the hot air exited the top. The floor generally had no perforated tiles. This method of cooling was relatively efficient, because the cold air went directly into the equipment cabinets and did not mix with the warm air.

With the introduction of rack-mounted servers, average power levels began to rise to 35 W to 75 W per square foot. The cabinet orientation became a problem because hot air now exited the back of one row of racks into the front of the next row. Thus the "hot aisle/cold aisle" layout came into being in the 1990s. CRAC units were still located mainly at the perimeter of the data center, but the floor tiles in the cold aisles now had vents (or were perforated). This worked better, and cooling systems were upgraded to handle the rising heat load by adding more and larger CRAC units with higher-power blowers and larger floor tile vent openings. See raised floor airflow diagram showing how rack temperatures increase with distance from CRAC.

This cooling method is still predominantly used in most data centers built in the last 10 years, and in many that are in the design stage. Raised floors have become deeper (2 feet to 4 feet is now more common) to allow cold air to be distributed using this "time-tested and proven" methodology. However, this method is cost-effective only up to a certain power level; beyond that level it has multiple drawbacks. It takes much more energy for the blower motors in the perimeter CRACs to push air at the higher velocities and pressures needed to deliver enough cold air through a single 2-foot by 2-foot perforated tile to support a 30 kW rack. Perforated floor tiles have even been replaced by floor grates in an attempt to supply enough cold air to racks full of high-density servers. (Note: Each 3.5 kW produces about 12,000 BTU/hr of heat, requiring 1 ton of cooling.) Unfortunately, 3.5 kW per rack has been exceeded many times over with the advent of the 1U and blade server. Instead of specifying how many tons of cooling an entire data center needs, we may now need 5 to 10 tons per rack!
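
Why a single tile struggles becomes clear from the airflow a rack needs. This sketch uses the common sensible-heat relation for standard air at sea level, BTU/hr = 1.08 × CFM × ΔT (°F); the 20°F temperature rise across the servers is an assumption, and the function name is hypothetical.

```python
BTU_PER_HR_PER_WATT = 3.412
# Sensible heat factor for standard air: 0.075 lb/ft^3 * 0.24 BTU/(lb*F) * 60 min/hr
SENSIBLE_HEAT_FACTOR = 1.08


def required_cfm(rack_kw: float, delta_t_f: float) -> float:
    """Airflow in cubic feet per minute needed to remove rack_kw of heat
    when the air warms by delta_t_f degrees Fahrenheit across the rack."""
    btu_per_hr = rack_kw * 1000 * BTU_PER_HR_PER_WATT
    return btu_per_hr / (SENSIBLE_HEAT_FACTOR * delta_t_f)


if __name__ == "__main__":
    DELTA_T = 20.0  # assumed intake-to-exhaust rise; real servers vary
    for kw in (3.5, 10, 20, 30):
        print(f"{kw:>4} kW rack: {required_cfm(kw, DELTA_T):,.0f} CFM")
```

Under that assumption a 3.5 kW rack needs roughly 550 CFM, while a 30 kW rack needs about 4,700 CFM, all of which would have to come up through the floor in front of it.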

As a result, the power used to cool high-density server "farms" has exceeded the power used by the servers themselves. In some cases, for every $1 spent to power the servers, $2 or more is spent on cooling. This is primarily due to the path efficiency problem: ideally, cooling should use less than half as much energy as the servers, not twice as much.

In some high-density applications, the traditional raised-floor perimeter cooling system is increasing overall energy use while still failing to adequately cool a full rack of high-density servers.

Non-raised floor cooling: Some newer cooling systems have been placed in close proximity to the racks. This improves cooling performance and efficiency without a raised floor. These systems can be used as a complete solution or as an adjunct to an overtaxed cooling system.

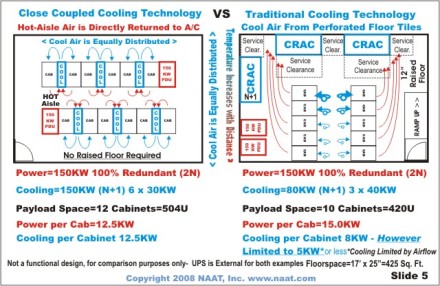

Close-coupled cooling advancements: Various cooling manufacturers have developed systems that shorten the distance air has to travel between the racks and the cooling unit. Some systems are "inrow" with the racks, and others are "overhead." They significantly increase cooling capacity, supporting racks of up to 20 kW each. With the required supporting infrastructure, these systems significantly reduce cooling costs by using less power to move air and by minimizing the mixing of hot and cold air. See close coupled cooling technology versus traditional cooling technology diagram.

Hot aisle containment seals the "hot aisle" and pairs it with inrow-style cooling units. This ensures that all the heat is extracted directly into the cooling system over a short distance, which increases the effectiveness and efficiency of cooling high-power racks.

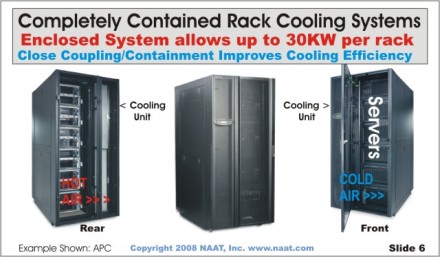

Fully enclosed racks offer the highest cooling density. With cooling coils inside a fully sealed rack, you can cool up to 30 kW in a single rack. Some major server manufacturers offer their own "fully enclosed" racks with built-in cooling coils that contain airflow within the cabinet. This is one of the most effective high-performance, high-density cooling solutions for standard "air cooled" servers at up to 30 kW per rack, and it potentially offers the highest level of energy efficiency. See completely contained rack cooling systems diagram.

Liquid-cooled servers: Today almost all servers use air to transfer heat out of the server. Several manufacturers are exploring building or modifying servers to use "fluid-based cooling." Instead of fans pushing air through the server chassis, liquid is pumped through the heat-producing components of the server (CPUs, power supplies, etc.). However, this technology is still in the testing and development stage. Moreover, because of the danger that leaking liquids pose to electronic systems, acceptance and uptake may be low. It is important to note that this method is different from using liquid (chilled water or glycol-based) to remove heat from the CRAC.

The good news is that some of the new cooling technology (inrow, overhead, and enclosed) can be added or retrofitted to existing data centers to improve the overall existing cooling, or they can be used only for specific "islands" to provide additional high-density cooling.

Best practice versus reality

One of the realities of any data center, large or small, is that computing equipment changes constantly. As a result, even the best planned data center tends to have new equipment installed wherever there is space -- leading to cooling issues. The necessity to keep the old systems running while installing new systems sometimes means that expediency rules the day. It is unusual to be able to fully stop and reorganize the data center to optimize cooling. If you can take an unbiased look at your data center (avoid saying "That's how it has always been done"), you may find that many of the recommendations below can make a significant improvement to your cooling efficiency -- many without disrupting operations.

Facilities and IT

Like many other groups, IT people and facilities people do not see things the same way. Facilities personnel are usually the ones you call to address cooling system projects. They are primarily concerned with the overall cooling requirements of the room (in BTU/hr or tons of cooling) and the reliability of systems that have been used in the past. They leave the racks to IT and just want to provide enough raw cooling power to meet the entire heat load, usually without regard to differing levels of rack density. The typical response from facilities is to add more of the same type of CRAC that is already installed (if there is space), which may partially, but inefficiently, address the problem. Some mutual understanding of the underlying issues is needed so that both sides can cooperate to optimize the cooling systems and meet the rising high-density heat load with a more efficient solution.

Simple low-cost solutions for optimizing cooling in existing installations

Clearly the raised floor is the present standard and is not going to suddenly disappear. Several techniques can be implemented to improve the cooling efficiency of data centers dealing with high-density servers.

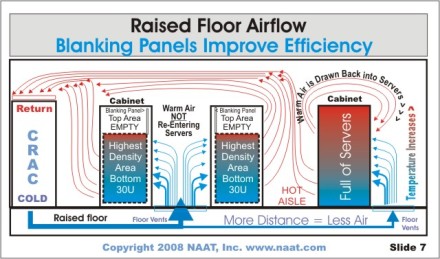

Blanking panels: This is by far the simplest, most cost-effective, and most misunderstood way to improve cooling efficiency. Ensuring that warm air from the rear of the rack cannot be drawn back into the front of the rack through open rack spaces will immediately improve efficiency. See raised floor airflow diagram showing how blanking panels improve efficiency.

Cable management: If the backs of your racks are cluttered with cables, chances are the cables are impeding airflow and causing the servers to run hotter than necessary. Make sure that the rear heat exhaust areas of the servers are not blocked. Cabling under the floor causes a similar problem. Many larger data centers reserve 1 foot to 2 feet of underfloor depth just for cabling to minimize its effect on airflow. Cables should be run together and tightly bundled to minimize their airflow impact.

Floor tiles and vents: The size, shape, position, and direction of floor vents and the flow rating of perforated tiles affect how much cool air is delivered where it is needed. Carefully evaluate the placement and amount of airflow relative to the highest-power racks. Use different tiles, vents, and grates to match the airflow to the heat load of each area.
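
One simple way to reason about matching airflow to heat load is to place a fixed number of perforated tiles in proportion to each rack's load. This sketch assumes every tile delivers roughly the same airflow (in practice it varies with tile type and distance from the CRAC) and uses the largest-remainder method so the counts add up exactly; the function name, rack names, and loads are hypothetical.

```python
import math


def allocate_tiles(rack_kw: dict[str, float], total_tiles: int) -> dict[str, int]:
    """Split total_tiles perforated tiles among racks in proportion to heat load.

    Largest-remainder method: floor each proportional share, then hand any
    leftover tiles to the racks with the largest fractional parts.
    Assumes every tile delivers roughly the same airflow.
    """
    total_kw = sum(rack_kw.values())
    if total_kw <= 0:
        raise ValueError("total rack load must be positive")
    shares = {rack: total_tiles * kw / total_kw for rack, kw in rack_kw.items()}
    alloc = {rack: math.floor(share) for rack, share in shares.items()}
    leftover = total_tiles - sum(alloc.values())
    by_remainder = sorted(shares, key=lambda r: shares[r] - alloc[r], reverse=True)
    for rack in by_remainder[:leftover]:
        alloc[rack] += 1
    return alloc


if __name__ == "__main__":
    # Hypothetical row: two legacy racks and two high-density racks (kW).
    row = {"A1": 4.0, "A2": 4.0, "B1": 20.0, "B2": 12.0}
    print(allocate_tiles(row, 10))
```

For the sample row it prints `{'A1': 1, 'A2': 1, 'B1': 5, 'B2': 3}`. When one rack's share far exceeds the space in front of it, that is a hint to use a higher-flow tile or grate there rather than more standard tiles.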

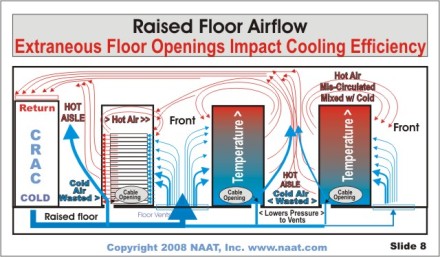

Unwanted openings: Cables normally enter the racks through holes cut into the floor tiles. These openings are a major source of cooling inefficiency because they let cold air escape into the back of the rack, where it is totally useless. They also lower the static air pressure under the floor, reducing the cold airflow available to the vented tiles in front of the rack. Every floor tile opening for cables should be fitted with an air containment device, typically a "brush" style grommet collar that lets cables enter but blocks airflow. See raised floor airflow diagram showing how extraneous floor openings impact cooling efficiency.

Cold aisle containment: A recent development is the cold aisle containment system, best described as a series of panels that span the top of the cold aisle from the top edges of the racks. It can also be fitted with side doors to further contain cold air. This keeps warm air from the hot aisle from mixing with the cold air and concentrates the cold air in front of the racks, where it belongs. See raised floor airflow diagram showing how cold air containment improves cooling efficiency.

Temperature settings: It has always been the "rule" to use 68 to 70 degrees Fahrenheit as the set point for maintaining the "correct" data center temperature. In reality, it is possible to carefully raise this a few degrees. The most important temperature is the one measured at the intake of the highest server in the warmest rack. While each manufacturer is different, most servers will operate well with intake air at 75 degrees Fahrenheit, as long as there is adequate airflow (check with your server vendors to verify their acceptable range).
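
The intake check described above is easy to script once survey readings are collected. This sketch represents each rack by the intake reading at its highest server, picks the warmest of those, and compares it with the 75°F example from the text; the data structure, function name, and sample readings are hypothetical.

```python
from typing import NamedTuple


class IntakeReading(NamedTuple):
    rack: str
    u_position: int   # rack unit of the server (higher number = nearer the top)
    temp_f: float     # intake air temperature in degrees Fahrenheit


def critical_intake(readings: list[IntakeReading]) -> IntakeReading:
    """Return the top-most server's intake reading in the warmest rack.

    Each rack is represented by the reading at its highest server,
    following the article's guidance on where to measure.
    """
    top_of_rack: dict[str, IntakeReading] = {}
    for r in readings:
        current = top_of_rack.get(r.rack)
        if current is None or r.u_position > current.u_position:
            top_of_rack[r.rack] = r
    return max(top_of_rack.values(), key=lambda r: r.temp_f)


if __name__ == "__main__":
    LIMIT_F = 75.0  # the article's example; confirm with your server vendors
    survey = [  # hypothetical sensor readings
        IntakeReading("A1", 2, 66.0), IntakeReading("A1", 40, 73.5),
        IntakeReading("B3", 2, 67.0), IntakeReading("B3", 40, 78.0),
    ]
    worst = critical_intake(survey)
    print(worst, "over limit" if worst.temp_f > LIMIT_F else "ok")
```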

Humidity settings: Along with temperature, the CRAC also maintains humidity. The typical target set point is 50% relative humidity, with the high and low limits set at 60% and 40%. To maintain humidity, most CRACs add moisture and/or "reheat" the air, which can take a significant amount of energy. Simply broadening your high-low set points to 75% and 25% can save a substantial amount of energy (check with your server vendors to verify their acceptable range).
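
The effect of widening the humidity band can be seen with a simple deadband model. This is an idealized sketch, not any CRAC's actual control logic, and the function names and hourly readings are hypothetical; it counts how often a unit would need to humidify or dehumidify under each set of limits.

```python
def humidity_action(rh_percent: float, low: float, high: float) -> str:
    """Simple deadband: humidify below low, dehumidify above high, else idle."""
    if rh_percent < low:
        return "humidify"
    if rh_percent > high:
        return "dehumidify"
    return "idle"


def active_readings(readings: list[float], low: float, high: float) -> int:
    """Count readings that would push the CRAC into humidifying or dehumidifying."""
    return sum(1 for rh in readings if humidity_action(rh, low, high) != "idle")


if __name__ == "__main__":
    hourly_rh = [38, 45, 52, 61, 58, 35, 64, 50]  # hypothetical readings, % RH
    print("40-60%:", active_readings(hourly_rh, 40, 60))
    print("25-75%:", active_readings(hourly_rh, 25, 75))
```

With the sample readings, the 40% to 60% band triggers action 4 times and the 25% to 75% band not at all.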

Synchronize your CRACs: In many installations, the CRACs do not communicate with one another. Each unit simply bases its temperature and humidity control on the conditions it senses in the (warm) return air. It is therefore possible (and even common) for one CRAC to be cooling or humidifying the air while another is dehumidifying and/or reheating it. This is easy to check for, and you can have your contractor add a master control system or change the units' set points to avoid or minimize the conflict. In many cases only one CRAC is needed to control humidity, while the others can have their high-low points set to a much wider range so they serve as a backup if the primary unit fails. Resolving this can save significant energy over the course of a year and reduce wear on the CRACs.
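
Spotting CRACs that fight each other amounts to comparing their current modes pairwise. In this sketch the mode names, function name, and status snapshot are hypothetical; real units report their state through their own displays or building management interfaces.

```python
from itertools import combinations

# Pairs of actions that cancel each other out when run by different CRACs.
OPPOSING = [("humidify", "dehumidify"), ("cool", "reheat")]


def find_conflicts(modes: dict[str, set[str]]) -> list[tuple[str, str, str]]:
    """Return (crac_a, crac_b, conflict) for every pair of units working against
    each other. modes maps a CRAC name to the set of actions it is running."""
    conflicts = []
    for a, b in combinations(sorted(modes), 2):
        for x, y in OPPOSING:
            if (x in modes[a] and y in modes[b]) or (y in modes[a] and x in modes[b]):
                conflicts.append((a, b, f"{x} vs {y}"))
    return conflicts


if __name__ == "__main__":
    status = {  # hypothetical snapshot, e.g. read off each unit's display
        "CRAC-1": {"cool", "humidify"},
        "CRAC-2": {"dehumidify", "reheat"},
        "CRAC-3": {"cool"},
    }
    for conflict in find_conflicts(status):
        print(conflict)
```

For the sample snapshot it reports CRAC-1 humidifying while CRAC-2 dehumidifies, plus two cool-versus-reheat pairs.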

Thermal survey: A thermal survey may provide surprising results and, if properly interpreted, can offer many clues for improving efficiency with any or all of the methods discussed here.

House fans: These do not really solve anything, but I have seen many of them used in futile attempts to prevent equipment from overheating. See why fans aren't really an option.

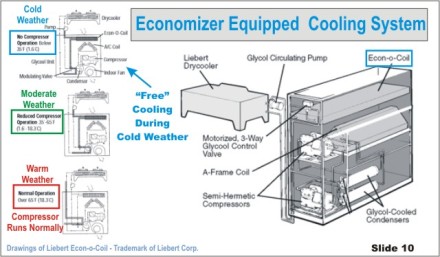

Economizer coils -- "free cooling": Most smaller and older installations used a single type of cooling technology for their CRACs, usually a cooling coil chilled by a compressor located within the unit. Whether it was hot or cold outside, the compressor had to run all year to cool the data center. A significant improvement to this basic system added a second cooling coil, connected by lines filled with water and antifreeze to an outside coil. When the outside temperature was low (50 degrees Fahrenheit or less), "free cooling" was achieved because the compressor could run less, or stop entirely below 35 degrees Fahrenheit. This simple and effective system was introduced many years ago but was not widely deployed because of its added cost and the need for a second outdoor coil unit. In colder climates, where it is primarily used, it can be a significant source of energy savings. While it is usually not possible to retrofit this to existing systems, it is highly recommended for any new site or cooling system upgrade. Use of the economizer has risen sharply in the last several years, and some states and cities even require it for new installations. See economizer equipped cooling system diagram.
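
The economizer behavior described above boils down to selecting a mode from the outdoor temperature. This sketch hard-codes the text's illustrative 50°F and 35°F thresholds as defaults; actual changeover points depend on the equipment and load, and the function name and sample temperatures are hypothetical.

```python
from collections import Counter


def cooling_mode(outdoor_f: float, partial_at_or_below_f: float = 50.0,
                 full_below_f: float = 35.0) -> str:
    """Classify how an economizer-equipped CRAC could run at a given outdoor
    temperature, using the article's illustrative thresholds as defaults."""
    if outdoor_f < full_below_f:
        return "free cooling, compressor off"
    if outdoor_f <= partial_at_or_below_f:
        return "partial free cooling, compressor assists"
    return "compressor only"


if __name__ == "__main__":
    temps = [28, 33, 41, 47, 52, 60, 75, 88]  # hypothetical outdoor readings, F
    print(Counter(cooling_mode(t) for t in temps))
```

For the sample temperatures it counts two readings with full free cooling, two with partial free cooling, and four with compressor-only operation.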

Location and climate: While we have discussed many of the issues and technologies within the data center, location and climate can also have a significant effect on cooling efficiency.

A modern, large, multi-megawatt, dedicated Tier IV data center is designed to be energy efficient. It typically uses large water chiller systems with economizer functions (described above) built into the chiller system. This makes it possible to shut down the compressors during the winter months and use only the low outdoor ambient air temperature to provide chilled water to the internal CRACs. In fact, Google built a super-sized data center in Oregon because the average temperature is low, water is plentiful, and low-cost power is available.

Not everyone operates in the rarefied atmosphere of a Tier IV world. The tens of thousands of small- to medium-sized data centers located in high-rise office buildings or office parks may not have this option. They are usually limited to the building's cooling facilities (or lack thereof), so their ability to use efficient high-density cooling is limited or nonexistent. In fact, some buildings do not operate or supply condenser water during the winter, and some do not have it at all. Often, when the office floor plan is being laid out, the data center is given the oddball space that no one else wants, and sometimes the IT department has no say in its design. As a result, the size and shape may not be ideal for rack and cooling layouts. When your organization is considering a new office location, it should weigh the building's ability to meet the data center's requirements, not just how nice the lobby looks.

The bottom line

There is no single best solution to the cooling and efficiency challenge. However, a careful assessment of existing conditions can identify a variety of solutions and optimization techniques that substantially improve the cooling performance of your data center. Some cost nothing to implement and others have a nominal expense, but all of them will have a positive effect. Whether your data center is 500 or 5,000 square feet, if these measures are done correctly, its energy efficiency will improve. Uptime will also improve, because the equipment will receive better cooling and the cooling systems will not have to work as hard. Don't just think about "going green" because it is fashionable. In this case it is necessary to meet the high-density cooling challenge while lowering energy operating costs.

ABOUT THE AUTHOR: Julius Neudorfer has been CTO and a founding principal of NAAT since its inception in 1987. He has designed and project managed communications and data systems projects for commercial clients and government customers.