7 major server hardware components you should know

Even with software-based data center options, it's still important to know the physical components of a server. Check out these terms to refresh your memory.

Servers are the powerhouse behind every data center. These modular, boxy components contain all the processing power required to route and store data for every possible use case.

Depending on the size of the data center, organizations use blade, rack or tower servers so admins can scale the number of servers depending on need, effectively maintain the hardware and easily keep them cool.

Whether a data center uses rack, blade or tower servers, the central server hardware components stay the same and help support simultaneous data processing at any scale. Here’s a quick refresher on the basic components of a server and how they help get data from point A to point B.

1. Motherboard

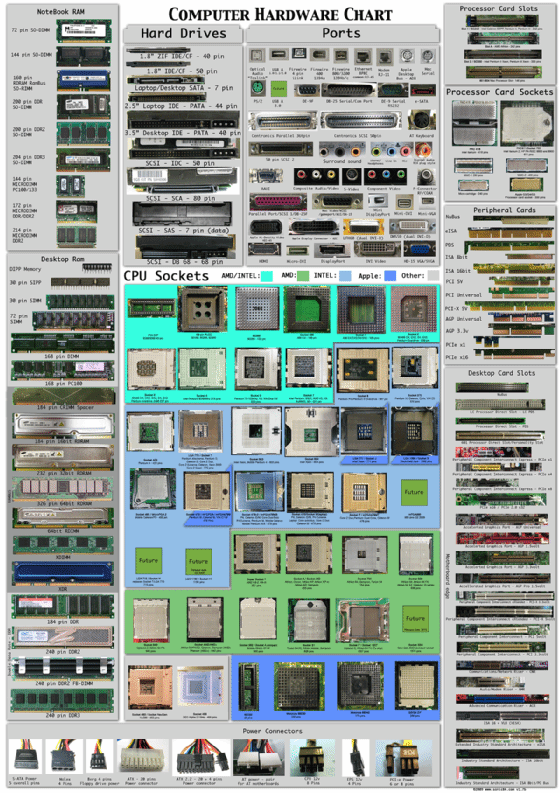

This piece of server hardware is the main printed circuit board in a computing system. At a minimum, the motherboard holds one or more central processing units (CPUs), firmware (traditionally BIOS, now usually UEFI) and slots for memory modules, along with an array of secondary chips that handle I/O and processing support, such as a Serial Advanced Technology Attachment (SATA) or Serial-Attached SCSI (SAS) storage interface. It also functions as the central connection point for all externally connected devices and offers a series of expansion slots -- such as PCIe -- for devices such as network or graphics adapters.

A standard motherboard design includes six to 14 fiberglass layers, copper connecting traces and copper planes. These components support power distribution and signal isolation for smooth operation.

Two well-known motherboard form factors are Advanced Technology Extended (ATX) and Low-Profile Extension (LPX). ATX provides more space than older designs for I/O connectors, expansion slots and local area network connections. The older LPX design places its ports at the back of the system.

For smaller form factors, there are the Balanced Technology Extended (BTX), Pico BTX and Mini Information Technology Extended (Mini-ITX) motherboards.

2. Processor

The CPU -- or simply processor -- is a complex micro-circuitry device that serves as the foundation of all computer operations. It supports hundreds of possible instructions. These are implemented in hundreds of millions to billions of transistors, which process low-level software instructions (machine code) and data to derive a desired logical or mathematical result. The processor works closely with memory, which holds both the software instructions and data to be processed and the results, or output, of those processor operations.

This circuitry carries out the basic instruction cycle of a computing system: fetch, decode, execute and write back. Key elements of the processor include the arithmetic logic unit (ALU), floating point unit (FPU), registers and cache memory, all coordinated by a control unit.

On a more granular level, the ALU performs integer arithmetic and logic operations on the operands. The FPU is dedicated to floating-point (noninteger) arithmetic, which it handles far faster than general-purpose integer circuitry could.
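The toy Python simulator below makes the fetch, decode, execute and write-back cycle concrete. It is a sketch only. The instruction set, the register count and the opcode names are hypothetical and were invented for this example. Real processors decode binary machine code and overlap these stages in a pipeline.

```python
# Hypothetical toy instruction set, invented for illustration only.
# Each instruction: (opcode, destination register, operand A, operand B).
PROGRAM = [
    ("LOADI", 0, 6, None),        # r0 <- 6 (immediate value)
    ("LOADI", 1, 7, None),        # r1 <- 7
    ("MUL", 2, 0, 1),             # r2 <- r0 * r1
    ("ADD", 3, 2, 0),             # r3 <- r2 + r0
    ("HALT", None, None, None),   # stop and return the register file
]

# Toy ALU: integer arithmetic and bitwise logic operations.
ALU_OPS = {
    "ADD": lambda a, b: a + b,
    "SUB": lambda a, b: a - b,
    "MUL": lambda a, b: a * b,
    "AND": lambda a, b: a & b,
    "OR": lambda a, b: a | b,
}

def run(program, num_registers=4):
    registers = [0] * num_registers
    pc = 0  # program counter: index of the next instruction
    while True:
        # Fetch: read the instruction the program counter points to.
        instruction = program[pc]
        pc += 1
        # Decode: split the instruction into its opcode and operand fields.
        opcode, dest, a, b = instruction
        if opcode == "HALT":
            return registers
        # Execute: load an immediate value or run the ALU on two registers.
        if opcode == "LOADI":
            result = a
        else:
            result = ALU_OPS[opcode](registers[a], registers[b])
        # Write back: store the result in the destination register.
        registers[dest] = result

print(run(PROGRAM))  # [6, 7, 42, 48]
```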

The terms central processing unit and processor are often used interchangeably. However, a server can contain more than one processor, such as CPUs in multiple sockets or additional graphics processing units (GPUs).

3. Random access memory

RAM is the main type of memory in a computing system. RAM holds the software instructions and data needed by the processor, along with any output from the processor, such as data to be moved to a storage device. Thus, RAM works very closely with the processor and must be fast enough to keep it supplied with instructions and data. Server main memory is almost always dynamic RAM (DRAM), and several DRAM variations are available for servers.

RAM is defined by its speed and volatility. RAM offers much faster read/write performance than storage devices such as hard disks and SSDs, which is why it serves as the working bridge between the OS, applications and hardware. RAM is also volatile and will lose its contents when power is removed from the computer. Because RAM is intended for high-performance temporary storage, the computer requires permanent, or non-volatile, storage to retain applications and data when the system is turned off or restarted.
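Volatility has a practical consequence in software. Data an application produces stays in RAM until it is explicitly written to non-volatile storage.

The Python sketch below writes a file and then calls `os.fsync`. That call asks the operating system to move the data out of its in-memory cache and onto the storage device. Two caveats apply:

- The file name `results.bin` is arbitrary.
- True durability also depends on the drive honoring cache-flush commands.

```python
import os
import tempfile

def write_durably(path: str, data: bytes) -> None:
    """Write data and ask the OS to push it from RAM to non-volatile storage."""
    with open(path, "wb") as f:
        f.write(data)          # data sits in Python's buffer, then the OS page cache (RAM)
        f.flush()              # hand Python's user-space buffer to the operating system
        os.fsync(f.fileno())   # request that cached data be written to the storage device
    # On POSIX systems, fully persisting a newly created file can also require
    # fsyncing its parent directory; that step is omitted here for brevity.

path = os.path.join(tempfile.mkdtemp(), "results.bin")  # hypothetical file name
write_durably(path, b"output computed in RAM")

with open(path, "rb") as f:
    print(f.read())  # b'output computed in RAM'
```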

RAM chips are typically organized and built into modules that follow standardized form factors. This enables memory to be added to a server easily or replaced quickly in the event of a memory failure. The most common form factor for DRAM is the dual in-line memory module (DIMM), and DIMMs are available in a wide range of capacities and performance characteristics. A typical server can contain hundreds of gigabytes of memory.
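Server memory capacity comes down to simple arithmetic: populated DIMM slots times the capacity of each DIMM. The configuration below is a hypothetical example: two sockets, eight memory channels per CPU and one 32 GB DIMM per channel. Supported layouts depend on the specific CPU and motherboard.

```python
def total_memory_gb(sockets: int, channels_per_socket: int,
                    dimms_per_channel: int, dimm_size_gb: int) -> int:
    """Installed capacity = populated DIMM slots x capacity of each DIMM."""
    populated_slots = sockets * channels_per_socket * dimms_per_channel
    return populated_slots * dimm_size_gb

# Hypothetical server: 2 CPU sockets, 8 channels per socket, one 32 GB DIMM each.
print(total_memory_gb(2, 8, 1, 32))  # 512
```

On many platforms, installing two DIMMs per channel doubles capacity but can lower the supported memory speed. Capacity and bandwidth are therefore often traded against each other.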

4. Hard disk drive

The hard disk drive (HDD) reads and writes data on spinning magnetic disks, positioning its read/write heads over them as needed. It is one technology for data storage on server hardware. Developed at IBM in 1953, the HDD has evolved over time from the size of a refrigerator to the standard 2.5-inch and 3.5-inch form factors.

An HDD is an electromechanical device using a collection of stacked disk platters mounted on a central spindle in a sealed chamber. These platters can spin at up to 15,000 revolutions per minute (RPM). An actuator arm, driven by a voice-coil motor, positions the read/write heads over each platter surface. When writing, the heads convert electronic 1s and 0s into magnetic patterns on the platters; when reading, they convert those patterns back into 1s and 0s.
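The spin rate sets a floor on how quickly a drive can reach data. On average, the target sector is half a revolution away from the head. Average rotational latency is therefore half the time of one revolution.

This sketch computes that figure for common spindle speeds. It ignores seek time, the time spent moving the actuator arm, which adds to the total access time.

```python
def rotational_latency_ms(rpm):
    """Return (time per revolution, average rotational latency) in milliseconds.

    On average the target sector is half a revolution away from the head,
    so average rotational latency is half of one full revolution.
    """
    ms_per_revolution = 60_000 / rpm  # 60,000 ms in one minute
    return ms_per_revolution, ms_per_revolution / 2

for rpm in (7_200, 10_000, 15_000):
    full, average = rotational_latency_ms(rpm)
    print(f"{rpm} RPM: {full:.2f} ms per revolution, {average:.2f} ms average latency")
```

At 15,000 RPM this works out to 4 ms per revolution and 2 ms of average rotational latency. An SSD, with no spinning platters, has no rotational latency at all.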

Because the magnetic patterns of 1s and 0s remain on the platters after power is removed from the storage device, disk drives have long been the fundamental non-volatile storage option for computers. Disk drives communicate with the server’s motherboard using a standardized interface such as SATA or SAS.

Data center servers also use solid-state drives (SSDs), which replace spinning magnetic platters with non-volatile rewritable flash memory. That memory sits behind a standardized drive interface such as SATA, SAS or NVMe, which connects SSDs directly over PCIe. The result is a storage device with no moving parts that delivers low latency and high I/O performance for data-intensive use cases. SSDs cost more per gigabyte than hard disks, so organizations often use a mix of hard drive and solid-state storage in their servers to match each workload's performance demands.
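One common way to combine the two is tiering. Frequently accessed ("hot") data goes on SSDs, and less frequently accessed ("cold") data goes on hard disks.

Below is a minimal greedy sketch of that idea. The dataset names, sizes, access counts and SSD capacity are all hypothetical. Production tiering systems typically move data continuously based on measured access patterns rather than making a single placement pass.

```python
from typing import NamedTuple

class Dataset(NamedTuple):
    name: str
    size_gb: int
    reads_per_day: int  # rough measure of how "hot" the data is

def place_datasets(datasets, ssd_capacity_gb):
    """Greedily fill SSD capacity with the data that has the most reads per GB."""
    placement = {}
    free_ssd_gb = ssd_capacity_gb
    # Rank by access density so small, busy datasets claim SSD space first.
    ranked = sorted(datasets, key=lambda d: d.reads_per_day / d.size_gb, reverse=True)
    for d in ranked:
        if d.size_gb <= free_ssd_gb:
            placement[d.name] = "ssd"
            free_ssd_gb -= d.size_gb
        else:
            placement[d.name] = "hdd"  # does not fit: fall back to hard disk
    return placement

# Hypothetical datasets and sizes, for illustration only.
workloads = [
    Dataset("database-index", 200, 5_000_000),
    Dataset("vm-boot-images", 300, 400_000),
    Dataset("log-archive", 4_000, 1_000),
    Dataset("backups", 10_000, 50),
]
print(place_datasets(workloads, ssd_capacity_gb=600))
```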

5. Network connection

Servers are intended for client-server computing architectures and depend on at least one network connection to communicate with the data center LAN. LAN technologies first appeared in the 1970s, including Cambridge Ring, Ethernet, ARCNET and others, though Ethernet is by far the dominant networking technology today.

A network connection is primarily defined by its technology and its bandwidth, or speed. Early Ethernet network adapters supported 10 Mbps, while today’s server Ethernet adapters routinely support 10 Gbps or more. Modern servers can easily support multiple network connections to serve multiple workloads, such as multiple virtual machines. They can also aggregate (trunk) multiple network adapters to provide greater total bandwidth for demanding server workloads.
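Bandwidth translates directly into transfer time: the number of bits to move divided by the line rate. This idealized Python sketch ignores protocol overhead, so real transfers take somewhat longer.

It also treats aggregated links as a simple sum, which holds only for many parallel flows. Link aggregation typically assigns each flow to one member link by hashing, so a single flow is usually capped at one link's speed.

```python
def transfer_seconds(gigabytes: float, link_gbps: float, links: int = 1) -> float:
    """Idealized time to move data: bits divided by aggregate line rate.

    Ignores protocol overhead. The multi-link case assumes traffic spreads
    evenly across links, which typically requires many parallel flows.
    """
    bits = gigabytes * 8 * 10**9               # decimal gigabytes to bits
    return bits / (link_gbps * 10**9 * links)

print(transfer_seconds(100, 1))            # 800.0 s over one 1 Gbps link
print(transfer_seconds(100, 10))           # 80.0 s over one 10 Gbps link
print(transfer_seconds(100, 10, links=2))  # 40.0 s across two aggregated 10 Gbps links
```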

Networks evolved to handle communication between applications, but storage traffic -- reading and writing data between applications and storage devices -- can demand significant bandwidth. Dedicated storage networking technologies, such as Fibre Channel (FC), Fibre Channel over Ethernet (FCoE) and InfiniBand, are available to connect servers to data center storage subsystems.

A server’s network connection is created through a network adapter. The adapter can be a chip and physical port -- plug -- built into the motherboard, or a separate adapter card plugged into an available motherboard expansion slot, such as a PCIe slot. A conventional network adapter and dedicated storage network adapters can exist on the same server simultaneously.

6. Power supply

All servers require power, and the work of converting AC utility power into the DC voltages required by a server’s sensitive electronic devices is handled by the power supply (PS). The PS is typically an enclosed subsystem or assembly -- box -- installed in the server’s enclosure. AC is connected to the server from a power distribution unit (PDU) installed in the server rack. DC produced by the power supply is then distributed to the motherboard, storage devices and other components in the server through an array of DC power cables.

Power supplies are typically rated in watts, and a typical server can use anywhere from 200 W to 500 W, sometimes more, depending on the number and sophistication of devices in the server. Nearly all of that power is ultimately dissipated as heat that must be ventilated from the server. Power supplies typically include at least one fan that exhausts hot air from the server into the rack, where it can be efficiently removed from the rack and data center.
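Two conversions link power draw to cooling load:

- The AC power drawn at the PDU is the DC load divided by the power supply's efficiency.
- Each watt of continuous power becomes roughly 3.412 BTU per hour of heat.

The 400 W load and 92% efficiency in this sketch are hypothetical values chosen for illustration.

```python
WATTS_TO_BTU_PER_HOUR = 3.412  # 1 W of continuous power is about 3.412 BTU/h of heat

def wall_power_watts(dc_load_watts: float, psu_efficiency: float) -> float:
    """AC power drawn from the PDU to deliver a given DC load."""
    return dc_load_watts / psu_efficiency

def heat_btu_per_hour(watts: float) -> float:
    """Treat essentially all power consumed by the server as heat."""
    return watts * WATTS_TO_BTU_PER_HOUR

# Hypothetical server: 400 W of DC load behind a 92%-efficient power supply.
ac_watts = wall_power_watts(400, 0.92)
print(round(ac_watts, 1))                  # 434.8
print(round(heat_btu_per_hour(ac_watts)))  # 1483
```

The roughly 35 W gap between AC input and DC output is lost inside the power supply itself, which is one reason higher-efficiency supplies reduce cooling load as well as electricity use.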

Because a single power supply powers the entire server, it is a single point of failure. System reliability can be improved by using high-quality power supplies, over-rated power supplies -- capable of providing more power than the server actually needs -- and redundant power supplies, where a backup power supply can take over if the main power supply fails.
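Basic probability gives a rough estimate of what redundancy buys. Suppose each power supply is available a fraction A of the time and failures are independent. The chance that at least one of n supplies is working is then 1 - (1 - A)^n.

The 99.9% single-supply availability below is a hypothetical figure. The independence assumption overstates the benefit when both supplies share a failure cause, such as the same PDU or circuit.

```python
HOURS_PER_YEAR = 8760

def redundant_availability(single: float, units: int = 2) -> float:
    """Probability that at least one of `units` power supplies is working.

    Assumes independent failures and that any one surviving supply can
    carry the server's full load on its own.
    """
    return 1 - (1 - single) ** units

for units in (1, 2):
    availability = redundant_availability(0.999, units)
    downtime_hours = (1 - availability) * HOURS_PER_YEAR
    label = "single supply" if units == 1 else f"{units} redundant supplies"
    print(f"{label}: {availability:.6f} available, "
          f"~{downtime_hours:.4f} h of expected downtime per year")
```

Under these assumptions, a single supply averages about 8.8 hours of downtime per year, while a redundant pair drops to about 32 seconds.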

Progressive server designs forgo internal power supplies in favor of DC distributed throughout the rack on a common DC power bus. That bus is fed by shared power supplies placed in the server rack or blade chassis. Blade servers and chassis typically use this approach, and more traditional server form factors are starting to adopt it as well.

7. GPU

Graphics processing units (GPUs) have traditionally been the realm of personal computers. However, servers increasingly use GPUs for the complex and demanding mathematical operations needed by visualization, simulation and graphics-intensive workloads, such as AutoCAD. Similarly, the rise of virtual desktop infrastructure brings a need for graphics capabilities allocated to virtual desktop instances.

A GPU is a dedicated form of processor chip holding many processing cores, often thousands, that work in parallel on computational tasks driven by graphics or compute software. For example, the NVIDIA Tesla M60 card provides 4,096 CUDA cores across its two GPUs.

GPUs are often rated in teraflops; one teraflop is one trillion floating-point operations per second. When the GPU chip is incorporated onto a graphics adapter card, there are additional specifications, such as frames per second and the amount and type of dedicated graphics memory -- sometimes 32 GB of GDDR6 or more -- separate from the server’s memory.
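A standard back-of-the-envelope formula links core counts to teraflop ratings: theoretical peak = cores × clock rate × floating-point operations per core per clock.

This sketch makes two assumptions:

- Each core completes one fused multiply-add, counted as two operations, per clock.
- The clock runs at a hypothetical 1.0 GHz.

Real GPU clocks vary, and sustained throughput is usually well below the theoretical peak.

```python
def peak_tflops(cores: int, clock_ghz: float, flops_per_core_per_cycle: int = 2) -> float:
    """Theoretical peak = cores x clock rate x floating-point ops per core per cycle.

    The default of 2 assumes one fused multiply-add (a multiply plus an add)
    per core per clock cycle.
    """
    return cores * clock_ghz * 1e9 * flops_per_core_per_cycle / 1e12

# 4,096 cores at an assumed 1.0 GHz clock.
print(peak_tflops(4096, 1.0))  # 8.192
```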

Servers typically incorporate GPUs through a graphics adapter card installed in one of the server’s available expansion slots, such as a PCIe slot. The graphics adapter can demand 300 W or more of additional power, which often requires auxiliary DC power cables from the server’s power supply. This power draw also produces significant heat. That heat must be removed either by a fan on the adapter or, for passively cooled server cards, by strong airflow through the server chassis. The sheer size of a server-class graphics adapter, which often occupies two slots, can limit the number of expansion slots available on the server.
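Before adding a GPU, it is worth checking that the power supply has enough headroom for it. A minimal sketch of that check follows. The 80% utilization cap and the wattages in the example are hypothetical planning values, not vendor requirements. With redundant supplies, run the check against a single supply, since one unit must be able to carry the full load alone.

```python
def fits_power_budget(psu_watts: float, existing_load_watts: float,
                      added_watts: float, max_utilization: float = 0.8) -> bool:
    """True if the combined load stays under a chosen fraction of the PSU rating.

    The 0.8 default is a hypothetical planning margin that leaves headroom
    for load spikes; it is not a vendor specification.
    """
    return existing_load_watts + added_watts <= psu_watts * max_utilization

# Hypothetical server drawing 450 W before adding a 300 W GPU.
print(fits_power_budget(1100, 450, 300))  # True: 750 W fits under 880 W
print(fits_power_budget(800, 450, 300))   # False: 750 W exceeds 640 W
```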